Event-Based Neuromorphic Vision

Stereo Vision

Portable Biomedical Aid Device "NeuroGlasses"

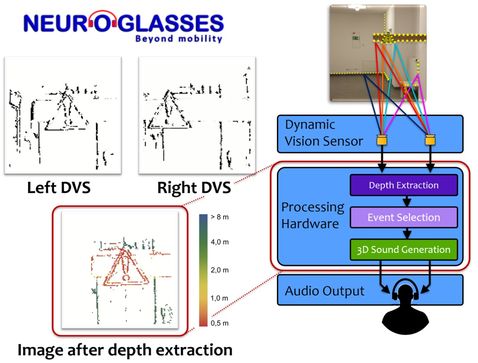

"NeuroGlasses" [1] is an electronic travel assistant (ETA) that helps blind and visually impaired people orient themselves and move through their environment. The traditional long (white) cane is still the most commonly used mobility aid today, even though it has several limitations, such as short range, limited resolution, and the inability to detect obstacles above hip level. NeuroGlasses aims to improve mobility for visually impaired people. It computes distances to objects using two DVS cameras and translates this information into easy-to-understand 3D audio signals that intuitively convey the positions of obstacles to the user. It is a hands-free, lightweight device with exceptional battery lifetime thanks to event-based DVS technology, which needs only minimal power and whose data streams enable lean, compute-efficient real-time processing algorithms. This project won first prize at the TUM IdeAward Business Startup Competition 2014 [2-3].
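How obstacle positions are turned into sound is not specified here. As a rough illustration only, the sketch below converts an obstacle position into two coarse binaural cues: an interaural time difference from the Woodworth spherical-head approximation, and a loudness that rises as the obstacle gets closer. Full 3D audio rendering typically relies on head-related transfer functions (HRTFs); the function name, the head radius, and the linear loudness ramp are our assumptions, not the NeuroGlasses design.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C
HEAD_RADIUS = 0.0875    # m, an assumed average adult head radius


def obstacle_to_audio_cues(x, z, max_range=5.0):
    """Hypothetical mapping from an obstacle position to coarse binaural cues.

    x: lateral offset in metres (positive = to the user's right)
    z: forward distance in metres
    Returns (azimuth in radians, interaural time difference in seconds, loudness in [0, 1]).
    """
    azimuth = math.atan2(x, z)  # 0 = straight ahead; the ITD model assumes |azimuth| <= pi/2
    # Woodworth spherical-head approximation of the interaural time difference.
    itd = HEAD_RADIUS / SPEED_OF_SOUND * (azimuth + math.sin(azimuth))
    distance = math.hypot(x, z)
    loudness = max(0.0, 1.0 - distance / max_range)  # closer obstacles sound louder
    return azimuth, itd, loudness
```

For an obstacle 1 m directly to the right, this gives an ITD of roughly 0.66 ms (sound reaching the right ear first) and a loudness of 0.8; an obstacle straight ahead yields zero ITD.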

Contact: Lukas Everding, Jörg Conradt

- V. Ghaderi, M. Mulas, VF. Pereira, L. Everding, D. Weikersdorfer, J. Conradt: A wearable mobility device for the blind using retina-inspired dynamic vision sensors. Intl. Conf. IEEE Engineering in Medicine and Biology (EMBC), 2015

- http://www.tum.de/wirtschaft/entrepreneurship/tum-ideaward/ideaward-2014

- http://www.tum.de/die-tum/aktuelles/pressemitteilungen/kurz/article/32242

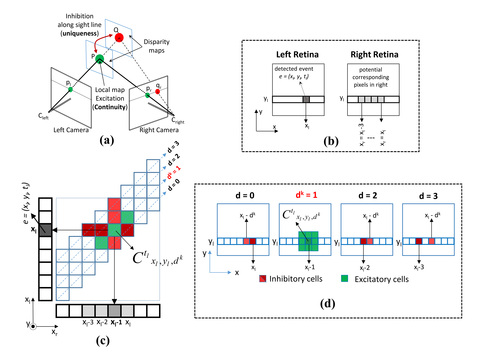

Cooperative Event-based Stereo Matching

In this work, we propose an asynchronous event-based stereo matching algorithm for event-based Dynamic Vision Sensors. The algorithm is grounded in the cooperative computing principle, first proposed by Marr and Poggio in the 1970s [1]. The classic cooperative approach to stereoscopic fusion operates on static features in two images. The question we address in this research is how to formulate an event-driven cooperative process that handles the dynamic spatio-temporal features provided by Dynamic Vision Sensors. To combine the temporal correlation of events with physical constraints, we added computationally simple internal dynamics to the network, so that each individual cell becomes sensitive to the timing of events. The competitive and cooperative activity of these distributed dynamic cells then provides a mechanism for extracting global spatio-temporal correlations from the incoming events. The figure shows the schematic architecture of the proposed network, in which each cell C_xyd represents the internal belief that pixel (x, y) in the left sensor corresponds to pixel (x-d, y) in the right sensor [2].
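To make the cell dynamics more concrete, here is a heavily simplified sketch of an event-driven cooperative network. Each cell C_xyd (stored as `cells[y, x, d]`) decays exponentially over time, is excited by the temporal coincidence of left and right events on the same row (rectified sensors are assumed), receives support from neighbouring cells with the same disparity, and is inhibited by rival disparities at the same left pixel. The class name, the parameters `tau`, `radius`, and `inhibition`, and the exact update rule are our assumptions for illustration; the published network [2] differs in its details, and inhibition along the right sensor's line of sight is omitted for brevity.

```python
import numpy as np


class CooperativeStereoSketch:
    """Hypothetical event-driven cooperative stereo network (illustrative only).

    cells[y, x, d] holds the belief that left pixel (x, y) matches right pixel
    (x - d, y); sensors are assumed rectified so that matches share a row.
    """

    def __init__(self, width, height, max_disp, tau=0.01, radius=1, inhibition=1.0):
        self.W, self.H, self.D = width, height, max_disp
        self.tau, self.radius, self.inhibition = tau, radius, inhibition
        # Timestamp of the latest event per pixel and sensor (-inf = never fired).
        self.last = {s: np.full((height, width), -np.inf) for s in ("L", "R")}
        self.cells = np.zeros((height, width, max_disp + 1))
        self.stamp = np.zeros((height, width, max_disp + 1))  # time of last cell update

    def _decayed(self, t, ys, xs):
        # Leaky internal dynamics: activity decays exponentially with time constant tau.
        return self.cells[ys, xs] * np.exp(-(t - self.stamp[ys, xs]) / self.tau)

    def process(self, side, x, y, t):
        """Feed one event from sensor 'L' or 'R'. For left events, return the
        winning disparity at that pixel (or None); right events return None."""
        self.last[side][y, x] = t
        other = "R" if side == "L" else "L"
        for d in range(self.D + 1):
            xl = x if side == "L" else x + d  # left-image column of cell (xl, y, d)
            xr = xl - d
            if not (0 <= xl < self.W and 0 <= xr < self.W):
                continue
            t_other = self.last[other][y, xr if side == "L" else xl]
            coincidence = np.exp(-abs(t - t_other) / self.tau)  # 1 for simultaneous events
            decay = np.exp(-(t - self.stamp[y, xl, d]) / self.tau)
            self.cells[y, xl, d] = self.cells[y, xl, d] * decay + coincidence
            self.stamp[y, xl, d] = t
        return self.winner(x, y, t) if side == "L" else None

    def winner(self, xl, y, t):
        r = self.radius
        ys = slice(max(0, y - r), min(self.H, y + r + 1))
        xs = slice(max(0, xl - r), min(self.W, xl + r + 1))
        support = self._decayed(t, ys, xs).sum(axis=(0, 1))  # cooperation per disparity plane
        own = self.cells[y, xl] * np.exp(-(t - self.stamp[y, xl]) / self.tau)
        score = support - self.inhibition * (own.sum() - own)  # competition between disparities
        best = int(np.argmax(score))
        return best if score[best] > 0 else None
```

In a short run where a vertical edge appears at column 5 in the left sensor and column 3 in the right sensor on several rows, the left events settle on disparity 2, and neighbouring rows reinforce each other through the support term.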

Contact: Mohsen Firouzi

- D. Marr, “Vision: A Computational Investigation into the Human Representation and Processing of Visual Information”, edited by Lucia M. Vaina, MIT Press, 2010, pp. 111-122.

- M. Firouzi, J. Conradt, “Asynchronous Event-based Cooperative Stereo Matching Using Neuromorphic Silicon Retinas”, Neural Processing Letters 43(2), pp. 311-326, April 2016.

Event-based spatio-temporal pattern matching

In this project, we develop a method to extract distance information about objects in the environment from two visual sources (stereo vision) in real time, under limited computing resources and power supply. To achieve this, we exploit the advantages of Dynamic Vision Sensors (DVS). Whereas conventional cameras sample all pixels at a fixed frequency, DVS pixels work independently and generate data only when they sense a change in brightness. Wherever nothing changes in the observed scene, no data is generated. This working principle results in an extremely low-latency, sparse data stream that carries non-redundant, relevant information and requires almost no pre-processing.
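The working principle of a DVS pixel is often approximated by an idealized model: a pixel emits an ON or OFF event whenever its log intensity has changed by a fixed contrast threshold since its last event. The sketch below applies this model to the sampled brightness of a single pixel; the function name and the threshold of 0.15 are assumptions, and real sensors add noise, refractory periods, and per-pixel threshold mismatch.

```python
import math


def dvs_pixel_events(samples, threshold=0.15):
    """Idealized DVS pixel model (illustrative assumption, not a sensor datasheet).

    samples: list of (timestamp, intensity) pairs with intensity > 0.
    Returns a list of (timestamp, polarity) events, polarity +1 (ON) or -1 (OFF).
    """
    _, first_intensity = samples[0]
    ref = math.log(first_intensity)  # log intensity at the last emitted event
    events = []
    for t, intensity in samples[1:]:
        level = math.log(intensity)
        # Emit one event per threshold crossing; an unchanged pixel emits nothing.
        while level - ref >= threshold:
            ref += threshold
            events.append((t, 1))
        while ref - level >= threshold:
            ref -= threshold
            events.append((t, -1))
    return events
```

A constant brightness produces no events at all, while doubling the brightness produces four ON events (log 2 ≈ 0.69 spans four thresholds of 0.15), which illustrates where the sparsity of the data stream comes from.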

To find the distance to objects, we collect visual information from two DVS with overlapping fields of view. An observed physical object leaves very similar spatio-temporal patterns in both DVS data streams. By identifying and matching these patterns and applying additional geometric constraints, we can infer the distance of the observed object from the disparity (relative spatial shift) of the patterns on the two retinas. Ultimately, we want to use this stereo method on autonomous systems so that they can navigate independently and avoid collisions with obstacles along their way.
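The matching step can be sketched under simplifying assumptions: both sensors are rectified so that corresponding events lie on the same row, a match must have the same polarity and a timestamp within a short window, and the temporally closest candidate wins. Depth then follows from the standard pinhole stereo relation Z = f * B / d, with focal length f in pixels, baseline B in metres, and disparity d in pixels. The names `Event`, `match_event`, and `depth_from_disparity`, and all parameter values, are hypothetical; this nearest-in-time rule is a stand-in, not the project's pattern-matching method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    t: float  # timestamp in seconds
    x: int    # pixel column
    y: int    # pixel row
    p: int    # polarity: +1 (ON) or -1 (OFF)


def match_event(left, right_events, max_dt=0.002, max_disp=40):
    """Return the right-sensor event that best matches `left`, or None.

    Assumes rectified sensors (epipolar constraint: same row) and that objects
    appear further left in the right image, so disparity = left.x - right.x > 0.
    """
    best, best_dt = None, max_dt
    for r in right_events:
        disparity = left.x - r.x
        if r.y != left.y or r.p != left.p or not (0 < disparity <= max_disp):
            continue
        dt = abs(left.t - r.t)
        if dt <= best_dt:  # keep the temporally closest candidate
            best, best_dt = r, dt
    return best


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo relation Z = f * B / d (result in metres)."""
    return focal_px * baseline_m / disparity_px
```

With an assumed focal length of 200 px and a 10 cm baseline, a matched disparity of 10 px corresponds to a depth of 2 m; halving the disparity doubles the estimated distance.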

Contact: Lukas Everding

High Speed Embedded Reactive Vision

Pen Balancing / Pendulum Tracking

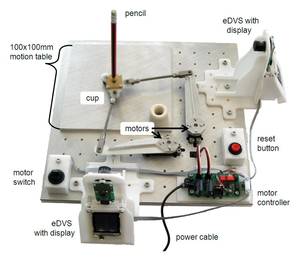

Animals by far outperform current technology in reacting to visual stimuli with low processing requirements, demonstrating astonishingly fast reaction times to changes. Current real-time vision-based robotic control approaches, in contrast, typically require substantial computational resources to extract relevant information from the sequences of images provided by a video camera. For example, robotic pole balancing with large objects is a well-known exercise in robotics research, but balancing arbitrary small poles (such as a pencil, which is too small for a human to balance) had not been achieved due to limitations in conventional vision processing.

Using Dynamic Vision Sensors (DVS), which report individual events, we developed a demonstrator that balances an arbitrary standard pencil [1-2]. A stereo pair of embedded DVS reports the events caused by the moving pencil, which stands on its tip on an actuated table. Our processing algorithm then extracts the pencil's position and angle without ever building a "full-scene" visual representation, processing only the spikes relevant to the pencil's motion.
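One way to estimate a pencil's position and tilt from individual events, without a full-scene representation, is an exponentially weighted least-squares line fit that each incoming event updates in constant time. The sketch below tracks the line x = a + b*y in a single sensor, parameterizing x as a function of y because the pencil is close to vertical. This recursive fit is our illustrative substitute, not necessarily the estimator used in [1-2]; the class name and the forgetting factor are assumptions, and combining the two sensors' lines into a 3D pose is not shown.

```python
import math


class EventLineTracker:
    """Hypothetical per-event line tracker: fits x = a + b*y with exponential forgetting."""

    def __init__(self, forget=0.98):
        self.forget = forget  # weight decay applied to older events at every update
        self.sw = self.sy = self.sx = self.syy = self.sxy = 0.0

    def update(self, x, y):
        # Decay the weighted sums, then add the new event with weight 1 (O(1) per event).
        f = self.forget
        self.sw = f * self.sw + 1.0
        self.sy = f * self.sy + y
        self.sx = f * self.sx + x
        self.syy = f * self.syy + y * y
        self.sxy = f * self.sxy + x * y

    def line(self):
        """Solve the weighted normal equations; return (a, b) or None if degenerate."""
        det = self.sw * self.syy - self.sy ** 2
        if abs(det) < 1e-9:
            return None
        b = (self.sw * self.sxy - self.sy * self.sx) / det
        a = (self.sx - b * self.sy) / self.sw
        return a, b

    def tilt_deg(self):
        """Tilt from vertical in degrees, derived from the slope b."""
        fit = self.line()
        return None if fit is None else math.degrees(math.atan(fit[1]))
```

Because old events fade with the forgetting factor, the fit follows a moving pencil; a smaller factor reacts faster but is noisier. One tracker per sensor would yield two 2D lines for the stereo pair.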

Contact: Jörg Conradt, Cristian Axenie

- Conradt J., Berner R., Cook M., Delbruck T. (2009). An Embedded AER Dynamic Vision Sensor for Low-Latency Pole Balancing. IEEE Workshop on Embedded Computer Vision (ECV09), 1-6, Tokyo, Japan.

- Conradt J., Cook M., Berner R., Lichtsteiner P., Douglas RJ., Delbruck T. (2009). A Pencil Balancing Robot using a Pair of AER Dynamic Vision Sensors. IEEE International Symposium on Circuits and Systems (ISCAS), 781-785.

Automotive / Industrial Applications

Production systems, whether traditional factory lines or autonomous systems in an “industry 4.0” context, often need to respond to a visual percept within milliseconds. Traditional cameras often provide high-resolution images but do not allow the data to be interpreted and acted upon within the time available before a decision is required (e.g. about the quality of a product or the exact position of an object). In such industrial production settings, we explore low-latency, low-bandwidth event-based vision sensors [1-2] for (ultimately autonomous) real-time closed-loop control of the production process. Embedded in production lines, such vision technology allows drastic improvements in product quality and production speed.

Contact: Lukas Everding, Michael Lutter

- Müller GR., Conradt J. (2012). Self-calibrating Marker Tracking in 3D with Event-Based Vision Sensors, International Conf. on Artificial Neural Networks (ICANN), pages 313-321, Lausanne, Switzerland.

- Müller GR., Conradt J. (2011). A Miniature Low-Power Sensor System for Real Time 2D Visual Tracking of LED Markers, Proceedings of the IEEE International Conference on Robotics and Biomimetics (IEEE-ROBIO), pages 2429-35, Phuket, Thailand.

Autonomous Mobile Robotics

Simultaneous-Localization And Mapping

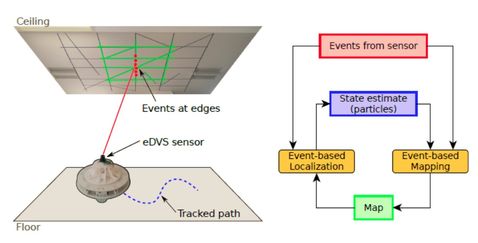

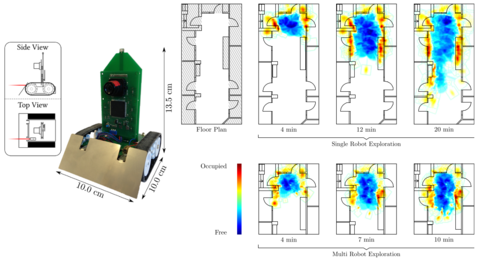

Mapping an environment while simultaneously estimating where an agent is within it (simultaneous localization and mapping, SLAM) has a long research tradition. Remarkable results have been presented in the recent past, with mobile robots equipped with powerful computers that both compute landmarks from standard visual input and update their maps. Probabilistic methods such as those presented in [1] have been especially successful, but they have the drawback of requiring large amounts of computing power. Here, we conduct research towards more energy- and memory-efficient algorithms based on event-based visual sensory signals. We develop real-time particle-filter-based 2D SLAM models that run on board small autonomous robots to map unknown environments [2], and we are currently extending this work to autonomous 3D SLAM.
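To illustrate the particle-filter idea in an event-based setting, the sketch below shows the localization half of such a filter against a known 2D grid map: each particle is a hypothesized robot position, every incoming event multiplies a particle's weight by how well the map explains that event at the particle's pose, and systematic resampling concentrates particles on good hypotheses. This is a generic textbook particle filter, simplified to translation only, and not the model of [2]; the map representation, the `eps` floor, and all function names are assumptions. A full SLAM system would additionally update the map from the best particles.

```python
import random


def predict(particles, motion, noise, rng=random):
    """Motion update: shift every particle by odometry `motion` plus Gaussian noise."""
    dx, dy = motion
    return [(x + dx + rng.gauss(0.0, noise), y + dy + rng.gauss(0.0, noise))
            for x, y in particles]


def update_weights(particles, weights, grid, event_offset, eps=0.05):
    """Measurement update for a single event.

    grid[row][col] in [0, 1] is an assumed map value (how likely a cell produces
    events); event_offset is the event's position relative to the robot.
    """
    ox, oy = event_offset
    new = []
    for (px, py), w in zip(particles, weights):
        cx, cy = round(px + ox), round(py + oy)
        inside = 0 <= cy < len(grid) and 0 <= cx < len(grid[0])
        # eps keeps particles alive when a single event is not explained by the map.
        new.append(w * (eps + (grid[cy][cx] if inside else 0.0)))
    total = sum(new)
    return [w / total for w in new]


def systematic_resample(particles, weights, rng=random):
    """Draw len(particles) particles in proportion to their weights (low variance)."""
    n = len(particles)
    step = 1.0 / n
    u = rng.random() * step
    resampled, i, cumulative = [], 0, weights[0]
    for _ in range(n):
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
        u += step
    return resampled
```

Because each event triggers only a cheap per-particle lookup, the cost scales with the event rate rather than with image size, which is the property that makes on-board operation plausible.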

Furthermore, we investigate how to employ such methods on very small robots with limited capabilities. Our robots are equipped only with microcontrollers and low-power vision sensors that operate akin to biological retinas. They communicate with each other over wireless links, which allows them to map an environment as a team [3].

Contact: David Weikersdorfer, Jörg Conradt, Nicolai Waniek

- Sebastian Thrun, Wolfram Burgard, and Dieter Fox. 2005. Probabilistic Robotics (Intelligent Robotics and Autonomous Agents). The MIT Press.

- Weikersdorfer, D.; Hoffmann, R.; Conradt, J.: Simultaneous Localization and Mapping for event-based Vision Systems. ICVS 2013, 133-142.

- Waniek, N.; Biedermann, J.; Conradt, J.: Cooperative SLAM on Small Mobile Robots. IEEE-ROBIO 2015.

Autonomous Embedded Robotics

Most algorithms for small robots assume or require external resources for their computations and focus on a single robot. In this project, we research how to efficiently use the local computing capabilities of small robots in combination with an efficient sensory modality, the dynamic vision sensor. This approach reduces both the computational overhead and the complexity of many algorithms, making them suitable for neuromorphic on-board hardware [1] or even for a single embedded microcontroller. Furthermore, we seek to understand how multiple small robots can operate collaboratively yet autonomously. For instance, mapping a large environment could be parallelized across multiple robots that cooperatively solve simultaneous localization and mapping (SLAM) [2].

Contact: Nicolai Waniek

- J. Conradt, F. Galluppi, TC. Stewart: Trainable sensorimotor mapping in a neuromorphic robot. Robotics and Autonomous Systems, 2014

- N. Waniek, J. Biedermann, J. Conradt: Cooperative SLAM on Small Mobile Robots. IEEE-ROBIO 2015

High Speed Lightweight Airborne Robots

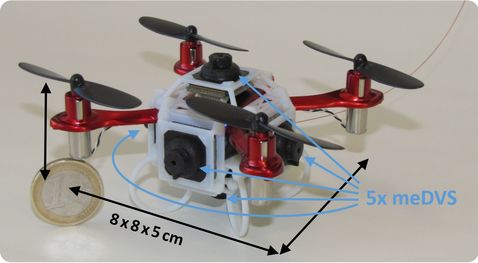

Flying animals predominantly use wide-field vision as their sensory source, which is unavailable to today’s miniature flying robots because video image analysis is expensive (typically requiring GPU-based algorithms). Event-based dynamic vision sensors (DVS) offer fantastic opportunities for high-speed miniaturized operation under severe weight, computing power, and real-time constraints. We are developing a fully autonomous miniature flying robot that processes neuromorphic vision on board in real time, the result of a two-stage engineering effort:

- Development of a miniaturized embedded Dynamic Vision Sensor (meDVS) for immediate real-time on-board processing of vision events (e.g. optic flow, object tracking).

- System integration on a tiny autonomous flying robot carrying multiple meDVS for stand-alone, real-time neuromorphic flight with fast control updates, despite severely limited on-board computing and power resources.
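For the on-board optic-flow processing mentioned above, one well-known event-based technique fits a local plane t = a*x + b*y + c to the timestamps of recent events in a small neighbourhood; the plane's gradient (a, b) is the inverse of the local normal velocity, so the velocity is (a, b) / (a² + b²). The sketch below is a minimal NumPy version of that idea; we do not claim it matches the meDVS firmware, and the function name and neighbourhood handling are assumptions (a practical version would reject outliers and select events by recency).

```python
import numpy as np


def plane_fit_flow(events):
    """Estimate local normal flow (pixels per second) from nearby events.

    events: iterable of (x, y, t) tuples from a small spatial neighbourhood.
    Fits t = a*x + b*y + c by least squares; returns (vx, vy) or None.
    """
    ev = np.asarray(events, dtype=float)
    A = np.column_stack([ev[:, 0], ev[:, 1], np.ones(len(ev))])
    (a, b, _c), *_ = np.linalg.lstsq(A, ev[:, 2], rcond=None)
    g2 = a * a + b * b
    if g2 < 1e-12:
        return None  # flat time surface: no measurable motion
    return a / g2, b / g2  # invert the time-surface gradient to get velocity
```

For an edge sweeping along x at 100 px/s, the timestamps grow by 0.01 s per column and the fit recovers a velocity of about (100, 0) px/s.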

The Neuromorphic Mini Quadcopter (8 × 8 × 5 cm, ~44 g) is based on a modified RC toy (JXD385) with custom open-source on-board flight stabilization firmware. An independent, miniaturized on-board main control board allows real-time implementation of neuromorphic algorithms, e.g. event-based autonomous simultaneous localization and mapping (SLAM, see above). The main control CPU interfaces with up to five orthogonally mounted mini DVS to obtain raw or preprocessed vision events, directs the flight control board, and can optionally communicate over high-speed WLAN with a remote PC for debugging. The Neuromorphic Mini Quadcopter can be controlled with a user's RC remote control (which overrides on-board control) or act completely autonomously based on on-board real-time vision processing algorithms. Ultimately, we envision a swarm of such mini quadcopters quickly exploring and mapping unknown indoor environments.

Contact: Jörg Conradt