Information Processing in Distributed Neuronal Circuits

Interacting Cortical Maps

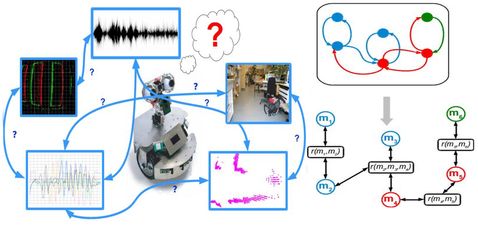

In this project we are developing an alternative, neurobiologically inspired method for real-time interpretation of sensory stimuli in mobile robotic systems: a distributed network in which information storage and processing are intertwined, allowing efficient parallel reasoning. The network consists of interconnected heterogeneous software units, each encoding a different feature of the environment's state in a local representation. These pieces of environmental knowledge interact through mutual influence, which keeps the overall system coherent.

Sample instantiations of such a system focus on mobile robotics [1]. To obtain a robust and unambiguous description of a robot's current orientation within its environment, the available sensory cues are fused. Given the available data, the network relaxes to a globally consistent estimate of the robot's state, similar to the scenario in [2].

Without requiring precise modelling and parametrization of the system, such a network can combine information from multiple sensors into a global estimate that is more precise than the individual estimates, even in complex multisensory fusion scenarios [3]. Because computation is distributed, so that each unit processes and stores only local information using basic mathematical relations, overall complexity is reduced.
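The relaxation idea can be illustrated with a deliberately simplified sketch. It assumes three hypothetical maps, each holding a scalar heading estimate (in degrees, away from the 0/360 wrap-around) derived from one sensor and linked to the other maps by an identity relation. This is a generic consensus scheme written for illustration, not the network published in [1] or [3]; `relax_maps`, `coupling` and `sensor_weight` are our own names.

```python
import numpy as np

def relax_maps(sensor_readings, coupling=0.5, sensor_weight=0.1, steps=500):
    """Toy relaxation of fully connected 'maps' that all encode the same heading.

    Each map sees only its own sensor reading and the current values of the
    other maps; repeated local updates pull the maps toward a consensus.
    """
    readings = np.asarray(sensor_readings, dtype=float)
    n = readings.size
    estimates = readings.copy()  # every map starts from its own sensor
    for _ in range(steps):
        # Mean of the *other* maps' current estimates (the local neighbourhood).
        neighbour_mean = (estimates.sum() - estimates) / (n - 1)
        # Pull toward neighbours (coherence) and toward own sensor (evidence).
        estimates = (estimates
                     + coupling * (neighbour_mean - estimates)
                     + sensor_weight * (readings - estimates))
    return estimates
```

For readings of 10, 12 and 14 degrees the maps settle at about 11.76, 12.00 and 12.24: they agree much more closely with each other, while their mean stays at the sensors' average. Raising `coupling` relative to `sensor_weight` (within the range where the update stays stable) tightens the agreement further.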

Given its generality, computational efficiency, and ease of implementation, our approach is a promising candidate for a wide range of cognitive robotic applications [4]. Because it is highly parallelizable, it is well suited to implementation on massively parallel hardware architectures for real-time robotics applications.

Contact: Cristian Axenie

- C. Axenie, J. Conradt, Cortically Inspired Sensor Fusion Network for Mobile Robot Heading Estimation, International Conference on Artificial Neural Networks (ICANN), 2013.

- M. Cook, L. Gugelmann, F. Jug, C. Krautz, A. Steger, Interacting Maps for Visual Interpretation, International Joint Conference on Neural Networks (IJCNN), 2011.

- C. Axenie, J. Conradt, Cortically inspired sensor fusion network for mobile robot egomotion estimation, Robotics and Autonomous Systems, 2014.

- I. Susnea, C. Axenie, Cognitive Maps for Indirect Coordination of Intelligent Agents, Informatics and Control, Vol. 24, 2015.

Multisensory Perception and Inference

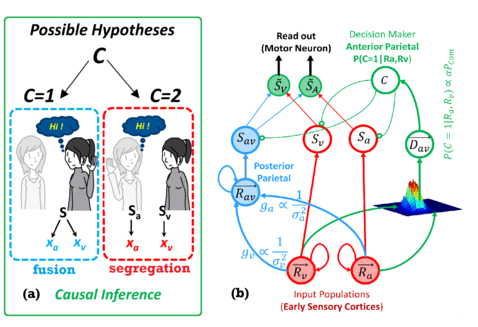

A stable and unified perception of the world requires combining different representations of the environment obtained from partially reliable sensory observations. Bayesian Decision Theory has successfully described the underlying process of sensory integration in a wide range of human multisensory perceptual tasks [1]. When sensory signals are generated by separate sources, however, fusing them is no longer rational; for instance, we do not combine the sound of mewing with the sight of a dog. Since in the real world we are constantly surrounded by multiple sources of information, information segregation is, in addition to fusion, a vital perceptual process.
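For two cues corrupted by independent Gaussian noise and combined under a flat prior, Bayesian integration reduces to a reliability-weighted average; this is the maximum-likelihood model tested for audio-visual localization in [1]. Writing $\hat{s}_A, \hat{s}_V$ for the unisensory location estimates and $\sigma_A^2, \sigma_V^2$ for their variances (our notation):

```latex
\begin{aligned}
\hat{s}_{AV} &= w_A\,\hat{s}_A + w_V\,\hat{s}_V,
  \qquad w_A = \frac{\sigma_A^{-2}}{\sigma_A^{-2} + \sigma_V^{-2}}, \quad w_V = 1 - w_A,\\
\sigma_{AV}^2 &= \frac{\sigma_A^2\,\sigma_V^2}{\sigma_A^2 + \sigma_V^2} \le \min\left(\sigma_A^2, \sigma_V^2\right).
\end{aligned}
```

The more reliable cue dominates the fused estimate, and the fused variance is never larger than either unisensory variance. This is why a sharp visual stimulus usually captures the perceived location of a sound, while a blurred one does not, as reported in [1].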

Deciding whether or not to fuse information, while taking into account all possible causal hypotheses, involves a probabilistic inference process called Causal Inference (Fig. a) [2]. Where in the cortex, and through which mechanisms, Causal Inference emerges is still not fully understood. Recently, however, it has been hypothesized that a hierarchical distributed circuit including parietal and early sensory cortices generates this process [2]. In this work, using a neural modeling approach, we have demonstrated how such a distributed cortical hierarchy (Fig. b) can perform probabilistic causal inference and reproduce human behavior [3].
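At the computational level, causal inference can be sketched with the widely used observer model of Körding et al. (2007), a formulation of the kind fitted in [2]: the observer computes the posterior probability that an auditory and a visual cue share one source and then averages the "fused" and "separate" estimates by that probability. This is a sketch of that normative model under assumed Gaussian noise and a Gaussian spatial prior, not the neural circuit model of [3]; the function name and all parameter values are hypothetical.

```python
import math

def causal_inference(x_a, x_v, sigma_a, sigma_v, sigma_p=20.0, mu_p=0.0, p_common=0.5):
    """Bayesian causal inference for one auditory and one visual cue.

    Returns (posterior probability of a common cause, model-averaged
    auditory location estimate). All parameters are illustrative.
    """
    va, vv, vp = sigma_a ** 2, sigma_v ** 2, sigma_p ** 2

    # Likelihood of both cues given ONE shared source (C = 1),
    # with the source location integrated out under a Gaussian prior.
    d1 = va * vv + va * vp + vv * vp
    q1 = ((x_v - x_a) ** 2 * vp + (x_v - mu_p) ** 2 * va + (x_a - mu_p) ** 2 * vv) / d1
    like_c1 = math.exp(-0.5 * q1) / (2 * math.pi * math.sqrt(d1))

    # Likelihood given TWO independent sources (C = 2).
    q2 = (x_v - mu_p) ** 2 / (vv + vp) + (x_a - mu_p) ** 2 / (va + vp)
    like_c2 = math.exp(-0.5 * q2) / (2 * math.pi * math.sqrt((vv + vp) * (va + vp)))

    # Bayes' rule over the two causal structures.
    post_c1 = like_c1 * p_common / (like_c1 * p_common + like_c2 * (1 - p_common))

    # Optimal auditory estimate under each structure (precision-weighted means).
    s_fused = (x_a / va + x_v / vv + mu_p / vp) / (1 / va + 1 / vv + 1 / vp)
    s_separate = (x_a / va + mu_p / vp) / (1 / va + 1 / vp)

    # Model averaging: weight each estimate by the posterior of its structure.
    return post_c1, post_c1 * s_fused + (1 - post_c1) * s_separate
```

With a reliable visual cue (`sigma_v=2`) and a noisy auditory cue (`sigma_a=8`), a 2-degree audio-visual discrepancy gives a common-cause posterior of roughly 0.7 and pulls the auditory estimate toward the visual one (fusion), whereas a 30-degree discrepancy drives the posterior close to zero and leaves the auditory estimate almost unchanged (segregation).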

Contact: Mohsen Firouzi

- D. Alais and D. Burr (2004). The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 14, pp. 257–262.

- T. Rohe, U. Noppeney (2015). Cortical Hierarchies Perform Bayesian Causal Inference in Multisensory Perception. PLoS Biol. 13: e1002073.

- M. Firouzi, S. Glasauer, and J. Conradt (2015). Causal Bayesian Inference in hierarchical distributed computation in the Cortex, towards a neural model. Bernstein Conference on Computational Neuroscience, Heidelberg, 2015.

Spatial Representations

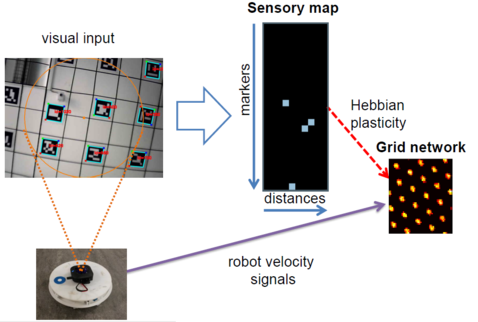

The recent discovery of grid cells in the rodent brain [1] has sparked interest in understanding spatial representations in neural networks, especially since grid cells have now been reported in the human brain as well [2]. These cells play a crucial role in rodent spatial navigation. Understanding how they process information in parallel to solve navigational tasks may help solve navigation problems on robots [3] in a parallel, asynchronous, and power-efficient way using novel neuromorphic hardware. Ultimately, this may lead to general principles for massively parallel algorithms that operate asynchronously on locally distributed information.
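The hexagonal firing pattern that gives grid cells their name is commonly idealized as the sum of three plane waves whose directions are 60° apart. The sketch below uses that textbook idealization, not the continuous attractor network of [3]; `grid_cell_rate` and its `spacing`, `orientation` and `phase` parameters are hypothetical.

```python
import numpy as np

def grid_cell_rate(x, y, spacing=0.5, orientation=0.0, phase=(0.0, 0.0)):
    """Idealized grid-cell firing rate at position (x, y), scaled to [0, 1].

    The hexagonal pattern is modelled as the sum of three plane waves whose
    directions are 60 degrees apart; `spacing` is the distance between
    neighbouring firing fields (same units as x and y).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    k = 4 * np.pi / (np.sqrt(3) * spacing)  # wave number for the given spacing
    total = 0.0
    for i in range(3):
        theta = orientation + i * np.pi / 3
        total = total + np.cos(k * (np.cos(theta) * (x - phase[0])
                                    + np.sin(theta) * (y - phase[1])))
    # The sum of the three waves ranges over [-1.5, 3]; map it linearly to [0, 1].
    return (total + 1.5) / 4.5
```

Evaluating it on a `np.meshgrid` of positions yields the familiar triangular lattice of firing fields. Changing `spacing` rescales the whole lattice; this is the grid scale that [1] reports to be organized in discrete modules.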

Contact: Marcello Mulas, Nicolai Waniek

- Stensola H, Stensola T, Solstad T, Frøland K, Moser MB, Moser EI (2012) The entorhinal grid map is discretized. Nature.

- Jacobs J, Weidemann C, Miller J, Solway A, Burke J, Wei X, Suthana N, et al. (2013) Direct recordings of grid-like neuronal activity in human spatial navigation. Nature Neuroscience 16, no. 9: 1188–1190.

- M. Mulas, N. Waniek, and J. Conradt, Hebbian plasticity realigns grid cell activity with external sensory cues in continuous attractor models, Frontiers in Computational Neuroscience, Vol 10, 2016.

Distributed Graphical Networks for Control

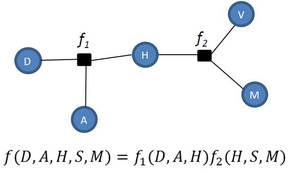

Human cognitive abilities far exceed those of today’s most advanced computer systems. Understanding human cognitive reasoning would move algorithm development a large step forward towards truly cognitive abilities, such as learning from prior experience while interacting with an environment that can only be observed through noisy measurements. So far, however, algorithmic imitations of the human brain (such as artificial neural networks) have been only partially successful. What has often been neglected in neurobiological modeling is that the brain’s computing substrate and its computing algorithms did not develop independently of each other. In this research project, we use graphical models (e.g., factor graphs [1]) combined with computational learning techniques to mimic the cognitive processing of the forebrain [2] on distributed hardware that is particularly well suited to such computations [1,3].
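Inference in a factor graph is typically performed by passing local messages between neighbouring nodes (the sum-product or belief-propagation algorithm), which is what makes it a good match for distributed hardware. The sketch below shows sum-product on the simplest case, a chain of discrete variables, in NumPy. It illustrates the technique only and is not the SpiNNaker or SoC inference engine described in [1,3]; the chain structure and the name `chain_marginals` are our assumptions.

```python
import numpy as np

def chain_marginals(unary, pairwise):
    """Sum-product message passing on a chain-structured discrete factor graph.

    unary:    n arrays of shape (K,), one local factor per variable x_i
    pairwise: n-1 arrays of shape (K, K), factor linking x_i (rows) and x_{i+1} (cols)
    Returns the n normalized single-variable marginals.
    """
    unary = [np.asarray(u, dtype=float) for u in unary]
    pairwise = [np.asarray(p, dtype=float) for p in pairwise]
    n = len(unary)

    # Forward pass: the message into x_{i+1} sums out x_i.
    fwd = [np.ones_like(unary[0])]
    for i in range(n - 1):
        msg = pairwise[i].T @ (unary[i] * fwd[i])
        fwd.append(msg / msg.sum())  # normalize for numerical stability

    # Backward pass: the message into x_i sums out x_{i+1}.
    bwd = [np.ones_like(u) for u in unary]
    for i in range(n - 2, -1, -1):
        msg = pairwise[i] @ (unary[i + 1] * bwd[i + 1])
        bwd[i] = msg / msg.sum()

    # Belief at each node = local factor times both incoming messages.
    beliefs = [unary[i] * fwd[i] * bwd[i] for i in range(n)]
    return [b / b.sum() for b in beliefs]
```

On a chain (or any tree) the resulting marginals are exact and can be checked against brute-force enumeration of the joint distribution; on graphs with loops the same local updates give approximate "loopy" belief propagation. Each message depends only on neighbouring nodes, so the computation can be spread across many small processors.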

Contact: Indar Sugiarto, Jörg Conradt

- Sugiarto I., Conradt J. (2014) Factor Graph Inference Engine on the SpiNNaker Neural Computing System. International Conference on Artificial Neural Networks (ICANN), pp. 161–168, Hamburg, Germany.

- Sugiarto I., Maier P., Conradt J. (2013) Reasoning with discrete factor graph. International Conference on Robotics, Biomimetics, Intelligent Computational Systems, pp. 170–175, Yogyakarta, Indonesia.

- Sugiarto, I. and Conradt, J. (2016) Design and Evaluation of a Factor-graph-based Inference Engine On a System-On-Chip (SoC), Microprocessors and Microsystems Journal, Elsevier, under review.