Workshop on Neuromorphic Vision Sensors and Event-based Information Processing for Robotics

Workshop Organizers: Jörg Conradt, Cristian Axenie, Nicolai Waniek

Aim and Methods

Understanding the computational principles by which brains turn perception into behavior is one of the most challenging research questions of the coming decades. The NST group at the "Technische Universität München" (TUM) investigates theory, models, and applied robotic implementations of distributed neuronal information processing, in order to (a) discover key principles by which large networks of neurons operate and (b) implement those principles in engineered systems to improve their real-world performance. As part of this research effort, we provide an experimentation environment for (I) hardware developers, who integrate their sensors into real-world applications; (II) algorithm developers and modelers, who can validate their models; and (III) engineers such as roboticists, who explore and exploit alternative control techniques for technical systems.

This hands-on workgroup will explore alternative ways to integrate sensory information from both "classical" and "neuromorphic" devices in real time, to close the perception-cognition-action loop in challenging robot control tasks. Its main goal is to give students hands-on insight into neural processing algorithms and into analyzing robotic behavior in real-world scenarios.

For this workshop, we will provide neuromorphic vision sensors (eDVS) on board small mobile robots, which connect to student PCs via WLAN. A user-extendable software framework allows participants to control individual robots and to easily add custom functionality based on real-time sensor data, e.g. event-based vision information for obstacle avoidance. The programming infrastructure is flexible and abstracts away low-level hardware details, allowing application developers to plug in (neuromorphic) control algorithms and to explore and exploit the event-based information processing paradigm.
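The following minimal sketch illustrates the kind of custom functionality meant here: event-based obstacle avoidance. It assumes a hypothetical `Event` record (pixel coordinates, polarity, microsecond timestamp). It also assumes that image column 0 lies on the robot's left. Neither assumption comes from the provided framework, whose actual API may differ. The heuristic counts recent events in the left and right image halves and steers away from the busier side. This rests on the rough assumption that nearby, textured obstacles generate more events while the robot moves.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical event record (illustrative; not the framework's actual type).
struct Event {
    std::uint8_t x;      // pixel column, 0..127 on a 128x128 eDVS
    std::uint8_t y;      // pixel row, 0..127
    bool on;             // polarity: true = brightness increase
    std::uint64_t t_us;  // timestamp in microseconds
};

// Returns a steering value in [-1, 1]: positive = turn right, negative = turn
// left, 0 = no preference. Only events from the last `window_us` microseconds
// are counted. Assumes (hypothetically) that column 0 is on the robot's left.
double avoidance_steering(const std::vector<Event>& events,
                          std::uint64_t now_us, std::uint64_t window_us,
                          int width = 128) {
    assert(width > 0);
    std::size_t left = 0;
    std::size_t right = 0;
    for (const Event& e : events) {
        // Skip events stamped in the future or older than the time window.
        if (e.t_us > now_us || now_us - e.t_us > window_us) continue;
        if (e.x < width / 2) {
            ++left;
        } else {
            ++right;
        }
    }
    const std::size_t total = left + right;
    if (total == 0) return 0.0;
    // More activity on the left -> positive value -> turn right, and vice versa.
    return (static_cast<double>(left) - static_cast<double>(right)) /
           static_cast<double>(total);
}
```

The returned value could be mixed into the differential motor commands, for example by adding it to one track's speed and subtracting it from the other's. A count-based rule like this is crude, since a textured floor or a blinking light also produces events. Treat it as a starting point for experimentation rather than a robust controller.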

Provided Platform

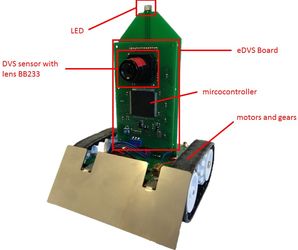

Multiple robots (PushBot), each equipped with a neuromorphic vision sensor (eDVS):

- Tank-like actuated mobile robot; size 10 × 10 cm (base), 14 cm (height)

- Maximum speed approximately human walking speed

- Powered by 4 × AA batteries; operating time ≥ 2 h

- WiFi connectivity for motor control and access to all on-board sensor data

- Sensors:

  - eDVS (128 × 128 event-based neuromorphic vision sensor), field of view ~80° horizontal/vertical

  - Inertial (accelerometer, gyroscope) and magnetic sensors

- Actuators:

  - Two motors for tank-like driving

  - Forward-facing laser pointer (e.g. for distance estimates)

  - Two LEDs on top, together radiating over 360°

  - Buzzer (loudspeaker, frequency 30 Hz to 10 kHz)
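The forward-facing laser pointer allows a simple distance estimate by triangulation. The sketch below assumes a pinhole camera model. It also assumes a laser beam running parallel to the optical axis at a known offset b (the baseline) from the sensor. Under these assumptions, a spot at distance d appears about v = f·b/d pixels from the image center, so d = f·b/v. Here f = (N/2)/tan(FOV/2) is the focal length in pixels. With N = 128 pixels and the ~80° field of view quoted above, f is roughly 76 pixels.

The parallel-beam geometry, the baseline value, and the function names are assumptions for illustration. They are not the PushBot's documented calibration. Locating the laser spot in the event stream is left out.

```cpp
#include <cassert>
#include <cmath>

// Focal length in pixels of a pinhole camera whose full field of view
// `fov_deg` spans `n_pixels` (e.g. 128 pixels and ~80 degrees for the eDVS).
double focal_length_px(int n_pixels, double fov_deg) {
    assert(n_pixels > 0 && fov_deg > 0.0 && fov_deg < 180.0);
    const double pi = std::acos(-1.0);
    const double half_fov_rad = 0.5 * fov_deg * pi / 180.0;
    return 0.5 * n_pixels / std::tan(half_fov_rad);
}

// Distance (same unit as `baseline`) to the surface hit by a laser beam that
// is assumed to run parallel to the optical axis at offset `baseline`.
// `spot_offset_px` is the laser spot's pixel distance from the image center
// along the baseline direction; it shrinks as the surface moves away.
double laser_distance(double baseline, double spot_offset_px, double f_px) {
    assert(baseline > 0.0 && spot_offset_px > 0.0 && f_px > 0.0);
    return f_px * baseline / spot_offset_px;
}
```

For example, take a hypothetical 3 cm baseline: a spot 10 pixels from the center then corresponds to about 0.23 m. Because d varies as 1/v, resolution degrades quickly with distance. A one-pixel error matters little at a 30-pixel offset but a great deal at a 2-pixel offset.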

Resources

- Software infrastructure (C++ code base), available for download

- Tutorial on using the eDVS for event-based algorithms in applications such as real-time optic flow or visual object tracking

- Tutorial on PushBot control
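As a taste of event-based visual object tracking, here is a minimal sketch of a single-target tracker. Instead of processing frames, it updates its estimate with every event. Each event that falls within a fixed radius of the current estimate pulls the estimate toward it by a fraction `alpha`. This amounts to an exponential moving average over nearby events. The class name and parameters are hypothetical. This is one simple technique among many, not necessarily the one covered in the tutorial.

```cpp
#include <cassert>
#include <cmath>

// Minimal event-driven blob tracker (hypothetical, for illustration).
// Events within `radius` pixels of the current estimate move the estimate
// toward the event by the fraction `alpha`; events farther away are ignored,
// so unrelated activity elsewhere in the scene does not disturb the track.
class EventTracker {
public:
    EventTracker(double x0, double y0, double radius, double alpha)
        : x_(x0), y_(y0), radius_(radius), alpha_(alpha) {
        assert(radius > 0.0 && alpha > 0.0 && alpha <= 1.0);
    }

    // Processes one event at pixel (ex, ey); returns true if it was used.
    bool update(int ex, int ey) {
        const double dx = ex - x_;
        const double dy = ey - y_;
        if (std::hypot(dx, dy) > radius_) return false;
        x_ += alpha_ * dx;
        y_ += alpha_ * dy;
        return true;
    }

    double x() const { return x_; }
    double y() const { return y_; }

private:
    double x_, y_;   // current position estimate in pixels
    double radius_;  // gating radius in pixels
    double alpha_;   // update fraction per accepted event
};
```

A small `alpha` smooths out sensor noise but lags behind fast targets. A larger radius tolerates faster motion but is more easily captured by nearby clutter.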

Preliminary Project Ideas

- Target tracking and following (hand-moved target or robot chain)

- Multi-robot coordination (achieving a common goal)

- Trajectory stabilization (e.g. via optic flow or line following)

- Other motor control and planning aspects
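For the target-following idea, a simple proportional controller can turn a tracked target position into motor commands. The sketch below assumes normalized track speeds in [-1, 1] and image columns that increase toward the robot's right. Both conventions, and the `MotorCommand` and `follow_target` names, are hypothetical. They would need to be mapped onto the framework's actual motor interface.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical normalized motor command for a tank-like (differential) drive.
struct MotorCommand {
    double left;   // left track speed, -1 (full reverse) .. 1 (full forward)
    double right;  // right track speed, same range
};

// Limits a speed to the assumed normalized range [-1, 1].
double clamp_unit(double v) { return std::min(1.0, std::max(-1.0, v)); }

// Proportional controller that steers toward a tracked target.
// target_x: target column in the image (0 .. width-1), e.g. from a tracker.
// forward:  base forward speed; gain: turn strength per unit of error.
MotorCommand follow_target(double target_x, int width, double forward,
                           double gain) {
    assert(width > 1);
    const double center = 0.5 * (width - 1);
    // Normalized error: -1 at the left image border, +1 at the right border.
    const double error = (target_x - center) / center;
    const double turn = gain * error;
    // Target right of center -> left track faster than right -> turn right.
    return MotorCommand{clamp_unit(forward + turn), clamp_unit(forward - turn)};
}
```

Combined with an event-based tracker, this closes a basic perception-action loop. Too large a `gain` makes the robot oscillate around the target, so tune it while watching the robot's behavior.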